A research team has experimentally computed Jones polynomials using a quantum simulation of braided Majorana zero modes. By simulating the braiding operations of Majorana fermions, the team determined the Jones polynomials of different links. The study was published in Physical Review Letters.

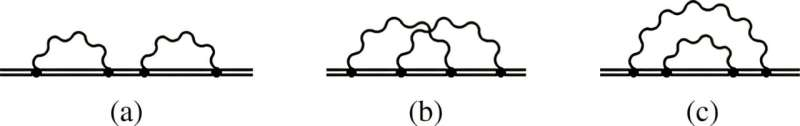

Link and knot invariants, such as the Jones polynomial, are powerful tools for determining whether two knots are topologically equivalent. Jones polynomials currently attract considerable interest because they have applications in disciplines such as DNA biology and condensed matter physics.
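
As a concrete illustration of how such an invariant can be computed classically, here is a minimal Python sketch, not the method used in the study, that evaluates the Jones polynomial through the Kauffman bracket state sum. It assumes each link is given as a planar-diagram (PD) code in the KnotTheory-style convention, where each crossing lists its four edge labels counterclockwise starting from the incoming under-strand, and that the caller supplies the sign of every crossing. The `HOPF` and `TREFOIL` inputs and all helper names are illustrative. The main loop visits all 2^n smoothings of an n-crossing diagram, which hints at why exact classical evaluation grows expensive for large links.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Laurent polynomials are stored as {exponent: integer coefficient}.
DELTA = {2: -1, -2: -1}  # value of each extra loop: -A^2 - A^-2


def poly_mul(p, q):
    """Multiply two Laurent polynomials, dropping zero coefficients."""
    out = defaultdict(int)
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            out[e1 + e2] += c1 * c2
    return {e: c for e, c in out.items() if c != 0}


def count_loops(pairings):
    """Count closed loops formed by joining edge labels pairwise (union-find)."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for u, v in pairings:
        parent[find(u)] = find(v)
    return len({find(x) for x in parent})


def kauffman_bracket(pd):
    """Kauffman bracket <L> in the variable A, normalized so that <unknot> = 1.

    pd: crossings (a, b, c, d), edge labels listed counterclockwise starting
    from the incoming under-strand (assumed KnotTheory-style PD convention).
    """
    total = defaultdict(int)
    for state in product((1, -1), repeat=len(pd)):  # all 2^n smoothings
        pairings = []
        for (a, b, c, d), s in zip(pd, state):
            # A-smoothing joins (a,b) and (c,d); B-smoothing joins (a,d) and (b,c)
            pairings += [(a, b), (c, d)] if s == 1 else [(a, d), (b, c)]
        term = {sum(state): 1}  # A^(#A - #B)
        for _ in range(count_loops(pairings) - 1):
            term = poly_mul(term, DELTA)
        for e, coef in term.items():
            total[e] += coef
    return {e: c for e, c in total.items() if c != 0}


def jones(pd, signs):
    """Jones polynomial as {exponent of t (Fraction): coefficient}.

    signs: +1 or -1 for each crossing of the oriented link, supplied by the caller.
    """
    w = sum(signs)  # writhe
    # V(t) = (-A^3)^(-w) <L>, then substitute A = t^(-1/4)
    factor = {-3 * w: -1 if w % 2 else 1}
    v = poly_mul(factor, kauffman_bracket(pd))
    return {Fraction(-e, 4): c for e, c in sorted(v.items(), reverse=True)}


# Illustrative PD codes, with crossing signs for one choice of orientation
HOPF = [(4, 1, 3, 2), (2, 3, 1, 4)]
TREFOIL = [(1, 4, 2, 5), (3, 6, 4, 1), (5, 2, 6, 3)]  # left-handed trefoil
print(jones(HOPF, [-1, -1]))
print(jones(TREFOIL, [-1, -1, -1]))
```

With these inputs the Hopf link gives V(t) = -t^(-5/2) - t^(-1/2) and the left-handed trefoil gives V(t) = -t^(-4) + t^(-3) + t^(-1). Different Jones polynomials prove that two links are inequivalent; identical ones do not prove equivalence, since distinct knots can share a Jones polynomial.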

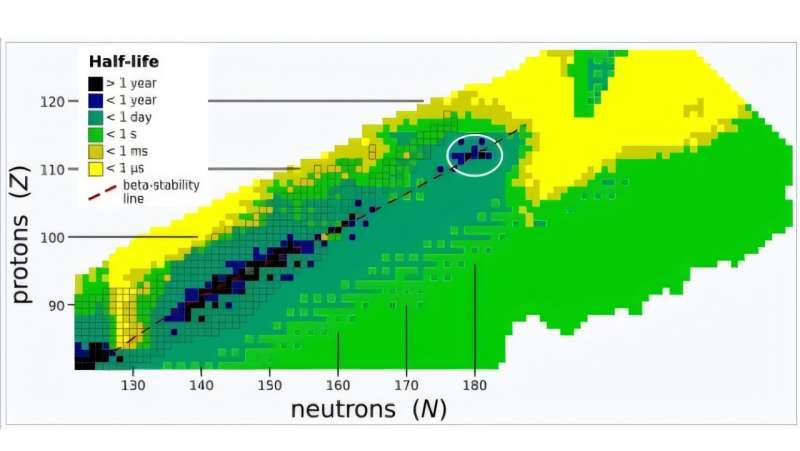

Unfortunately, even approximating the value of a Jones polynomial is #P-hard, and the most efficient classical algorithms require exponential resources. Quantum simulation, however, offers a promising way to investigate the properties of non-Abelian anyons experimentally, and Majorana zero modes (MZMs) are regarded as the most plausible candidates for realizing non-Abelian statistics.

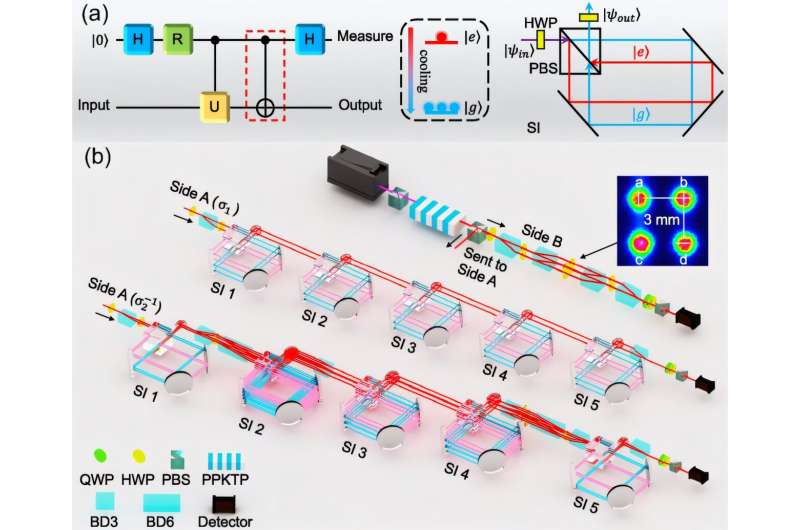

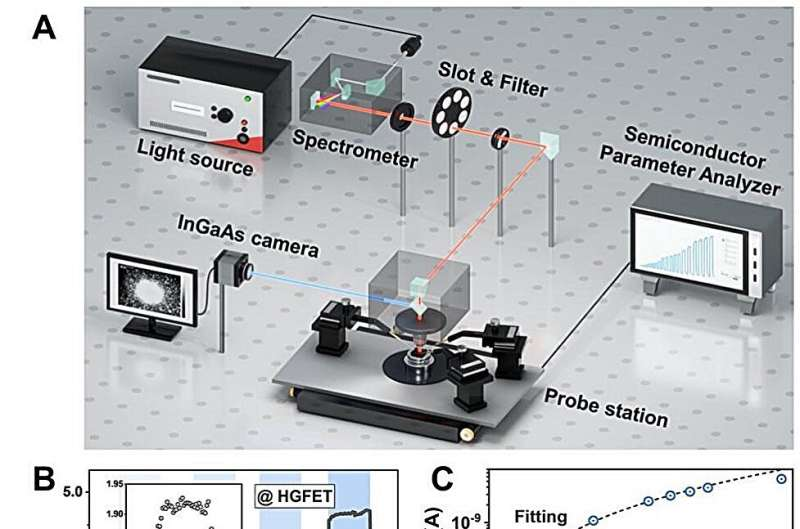

The team used a photonic quantum simulator that combines two-photon correlations with nondissipative imaginary-time evolution to perform two distinct MZM braiding operations, which generate the anyonic worldlines of several links. Using this simulator platform, the team has carried out a series of experiments simulating the topological properties of non-Abelian anyons.

They simulated the exchange of MZMs in a single Kitaev chain, detected the non-Abelian geometric phase of MZMs in a two-Kitaev-chain model, and extended the approach to higher-dimensional semion zero modes, studying their braiding, which was immune to local noise and conserved quantum contextuality resources.
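
The non-Abelian character of MZM exchanges can be checked with a few small matrices. In the minimal Python sketch below, four Majorana operators (for instance, the end modes of two Kitaev chains) are represented on two qubits through a Jordan-Wigner mapping, and the exchange of modes i and j is taken as B_ij = exp(π γ_i γ_j / 4) = (1 + γ_i γ_j)/√2, one common convention. The mapping, the labeling of the modes and the helper names are assumptions made for illustration; the article does not specify how the team encoded the modes.

```python
import math

# Single-qubit Pauli matrices as nested lists
I2 = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]
Z = [[1, 0], [0, -1]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def kron(a, b):
    rb, cb = len(b), len(b[0])
    return [[a[i // rb][j // cb] * b[i % rb][j % cb] for j in range(len(a[0]) * cb)]
            for i in range(len(a) * rb)]


def close(a, b, tol=1e-12):
    return all(abs(x - y) < tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))


IDENT = kron(I2, I2)
ZERO = [[0] * 4 for _ in range(4)]
# Hypothetical encoding: four Majorana operators on two qubits (Jordan-Wigner)
GAMMA = [kron(X, I2), kron(Y, I2), kron(Z, X), kron(Z, Y)]


def anticommutator(a, b):
    ab, ba = matmul(a, b), matmul(b, a)
    return [[x + y for x, y in zip(r1, r2)] for r1, r2 in zip(ab, ba)]


def braid(i, j):
    """Exchange of modes i and j: B_ij = exp(pi/4 g_i g_j) = (1 + g_i g_j) / sqrt(2)."""
    gg = matmul(GAMMA[i], GAMMA[j])
    return [[(x + y) / math.sqrt(2) for x, y in zip(r1, r2)] for r1, r2 in zip(IDENT, gg)]


b12, b23 = braid(0, 1), braid(1, 2)
print(close(anticommutator(GAMMA[0], GAMMA[2]), ZERO))  # True: Majoranas anticommute
b12_4 = matmul(matmul(b12, b12), matmul(b12, b12))
print(close(b12_4, [[-x for x in row] for row in IDENT]))  # True: four exchanges give -1
print(close(matmul(b12, b23), matmul(b23, b12)))  # False: the exchanges do not commute
```

Exchanging the same pair four times returns the state up to an overall sign, while exchanges of overlapping pairs give different results depending on their order; this order dependence is what "non-Abelian" refers to.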

Building on this work, the team extended its earlier single-photon encoding method to a two-photon spatial encoding that uses two-photon coincidence counts to encode states. This significantly increases the number of quantum states that can be encoded.
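
One simple way to picture the gain: if each photon can occupy n spatial paths, a single photon offers n basis states, while a coincidence detection of two photons, one in path i and one in path j, can label n × n = n² states. The Python sketch below assumes exactly this path-pair labeling; the number of paths, the index formula and the counts are hypothetical, not details of the team's setup. Coincidence counts alone give only populations, not the relative phases a full state reconstruction would need.

```python
def basis_index(path_a, path_b, n_paths):
    """Label the coincidence (photon A in path_a, photon B in path_b) as one basis state."""
    return path_a * n_paths + path_b


def populations(coincidences, n_paths):
    """Turn raw coincidence counts into populations over the n_paths**2 basis states."""
    total = sum(coincidences.values())
    probs = [0.0] * n_paths ** 2
    for (path_a, path_b), count in coincidences.items():
        probs[basis_index(path_a, path_b, n_paths)] += count / total
    return probs


n_paths = 4  # hypothetical number of spatial paths per photon
print(n_paths, "states with one photon vs", n_paths ** 2, "with two-photon coincidences")

# Hypothetical coincidence counts with two paths per photon
counts = {(0, 0): 480, (1, 1): 520}
print(populations(counts, 2))  # [0.48, 0.0, 0.0, 0.52]
```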

In addition, the team introduced a quantum cooling device based on a Sagnac interferometer, which converts the dissipative evolution into a nondissipative one. This improves the device's ability to recycle photonic resources and makes multi-step quantum evolution possible. Together, these techniques greatly improved the capability of the photonic quantum simulator and laid a solid technical foundation for simulating the braiding of Majorana zero modes in three Kitaev chains.
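
Imaginary-time evolution applies the non-unitary operator exp(-Hτ) to a state. For a Hamiltonian with non-negative energies, as assumed here, it damps high-energy components faster than low-energy ones, driving the state toward the ground state of H, but it also shrinks the total norm, which in an optical experiment shows up as lost photons. The minimal Python sketch below uses a hypothetical two-level Hamiltonian and first-order steps (1 - dτH) in place of the exact exponential to show both effects: without renormalization only about half of the initial norm-squared survives, while rescaling after each step keeps a normalized state. The rescaling is purely a numerical stand-in; the article does not describe how the Sagnac-based device achieves nondissipative evolution physically.

```python
import math


def mat_vec(h, psi):
    return [sum(h[i][j] * psi[j] for j in range(len(psi))) for i in range(len(h))]


def norm(psi):
    return math.sqrt(sum(abs(x) ** 2 for x in psi))


def imaginary_time_evolve(h, psi, dtau, steps, renormalize=True):
    """Approximate exp(-H * dtau * steps) psi with first-order steps psi -> (1 - dtau*H) psi.

    renormalize=False lets the norm shrink (amplitude loss, i.e. dissipation);
    renormalize=True rescales the state to unit norm after every step.
    """
    for _ in range(steps):
        h_psi = mat_vec(h, psi)
        psi = [p - dtau * hp for p, hp in zip(psi, h_psi)]
        if renormalize:
            n = norm(psi)
            psi = [x / n for x in psi]
    return psi


# Hypothetical two-level Hamiltonian with eigenvalues 0 and 2;
# its ground state is (1, -1) / sqrt(2).
H = [[1, 1], [1, 1]]
kept = imaginary_time_evolve(H, [1.0, 0.0], dtau=0.05, steps=200)
lossy = imaginary_time_evolve(H, [1.0, 0.0], dtau=0.05, steps=200, renormalize=False)
print(kept)              # close to [0.7071, -0.7071]
print(norm(lossy) ** 2)  # close to 0.5: half of the initial norm-squared is lost
```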

The team demonstrated that its setup could faithfully realize the desired braiding evolutions of MZMs: the average fidelities of both the quantum states and the braiding operations exceeded 97%.
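
For pure states, a standard measure of how close a measured state φ is to the ideal state ψ is the fidelity F = |⟨ψ|φ⟩|², which equals 1 only for identical states. The Python sketch below computes it and averages it over a list of hypothetical ideal and measured pairs. The article does not say which fidelity definitions the team used for its states and braiding operations, so this only illustrates the general idea.

```python
import cmath
import math


def fidelity(psi, phi):
    """Pure-state fidelity |<psi|phi>|^2 for normalized state vectors."""
    overlap = sum(a.conjugate() * b for a, b in zip(psi, phi))
    return abs(overlap) ** 2


def average_fidelity(pairs):
    """Mean fidelity over (ideal, measured) state pairs."""
    return sum(fidelity(ideal, measured) for ideal, measured in pairs) / len(pairs)


# Hypothetical ideal/measured pairs: a small rotation and a small relative-phase error
theta, phi = 0.1, 0.2
pairs = [
    ([1, 0], [math.cos(theta), math.sin(theta)]),
    ([1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), cmath.exp(1j * phi) / math.sqrt(2)]),
]
print(average_fidelity(pairs))  # cos(0.1)**2, about 0.990
```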

By combining different braiding operations of MZMs in the three-Kitaev-chain model, the team simulated five typical links and obtained their Jones polynomials, which distinguished these topologically inequivalent links from one another.

Such advances could contribute to fields such as statistical physics, molecular synthesis and DNA replication, where intricate topological links and knots frequently arise.

More information: Jia-Kun Li et al, Photonic Simulation of Majorana-Based Jones Polynomials, Physical Review Letters (2024). DOI: 10.1103/PhysRevLett.133.230603

Journal information: Physical Review Letters

Provided by University of Science and Technology of China
