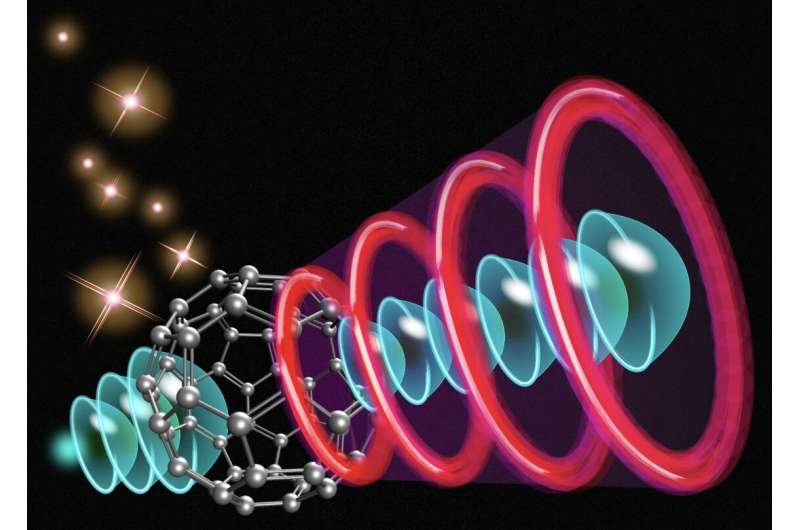

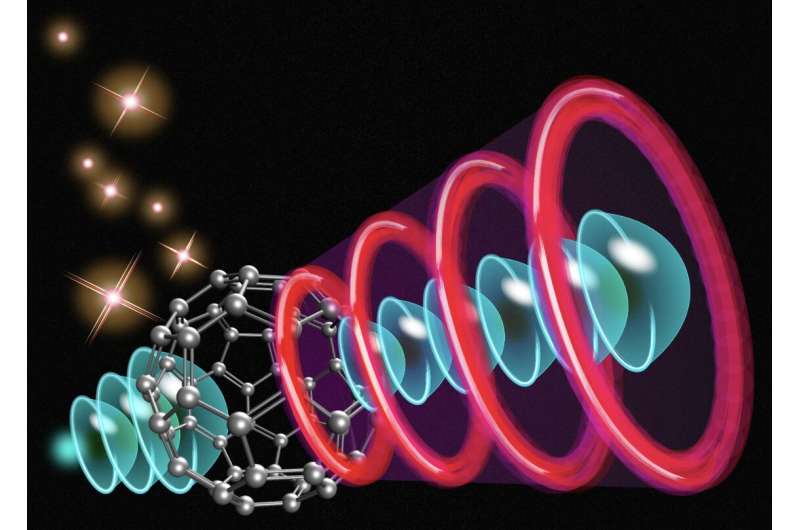

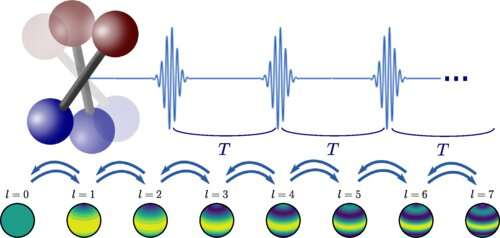

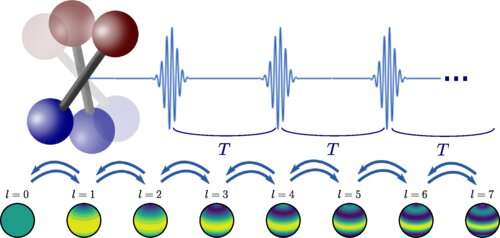

Gigahertz femtosecond lasers are well suited to enhancing and regulating laser machining quality and to engineering the physicochemical properties of materials. Materials scientists seek to understand how gigahertz femtosecond lasers interact with materials, although this is difficult because of the complex ablation dynamics involved.

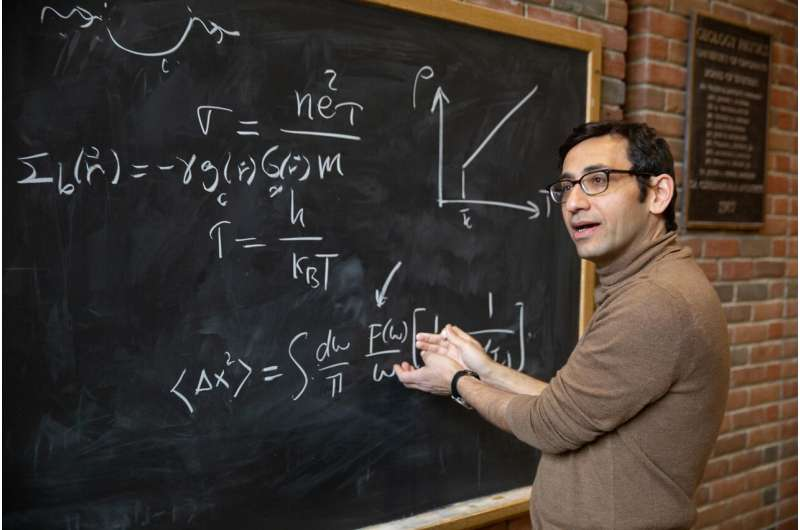

In a new report now published in Science Advances, Minok Park and a team of scientists in laser technologies and mechanical engineering at the University of California, Berkeley, studied the ablation dynamics of copper using gigahertz femtosecond bursts via time-resolved scattering imaging, emission imaging and emission spectroscopy.

The researchers combined these methods to reveal that gigahertz femtosecond bursts rapidly removed molten copper from the irradiated spot by material ejection. Material ejection stopped after burst irradiation because only a limited amount of remnant matter was left. The findings provide insights into the complex ablation mechanisms triggered by gigahertz femtosecond bursts, which can help in selecting optimal laser conditions for cross-cutting processes, nano-/micro-fabrication and spectroscopy.

Gigahertz and femtosecond laser ablation

Laser ablation is the removal of material from a surface through its interaction with a high-power laser, and it has had significant impact across energy harvesting and storage, biomedicine, optoelectronics and spectroscopy. Ultrafast femtosecond laser ablation offers a direct, one-step, chemical-free pathway for material machining and ablation sampling, and it is suited to precisely regulating the ablation features.

In this study, Park and colleagues developed a variety of methods to examine laser ablation dynamics in real time. They studied the ablation of copper with gigahertz femtosecond laser bursts and compared the outcomes to single femtosecond pulse ablation. The combined methods showed that the bursts rapidly removed molten liquid material and that material removal halted after burst irradiation. The researchers obtained direct insights into the dynamics and dominant mechanism of gigahertz ablation with femtosecond pulses.

The ultrafast laser experiments

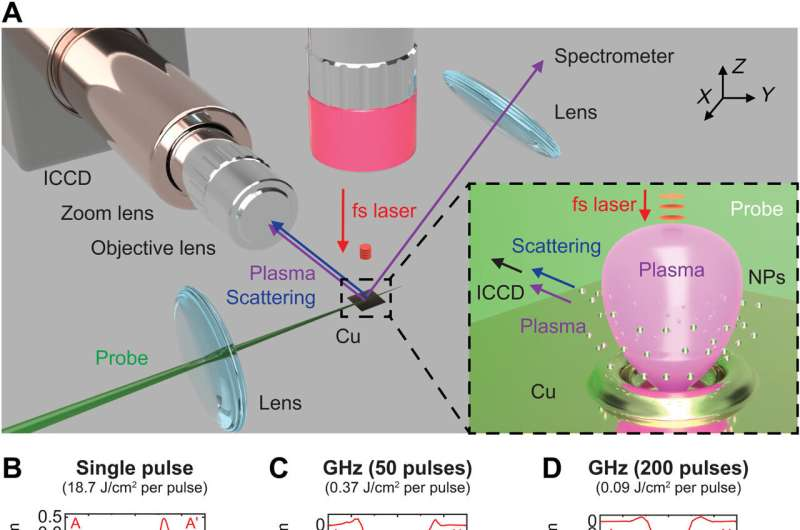

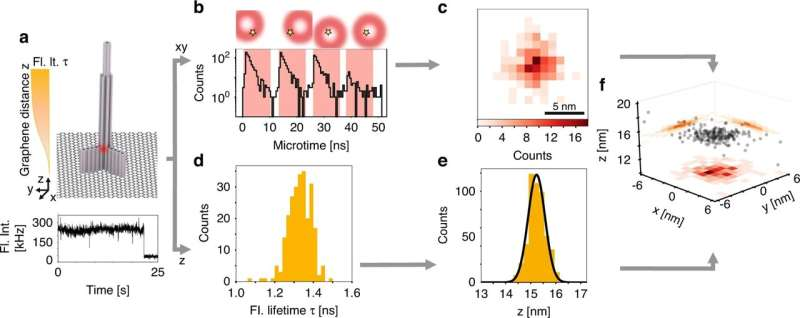

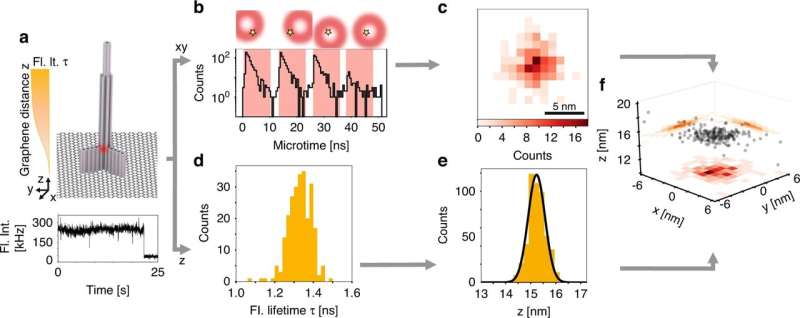

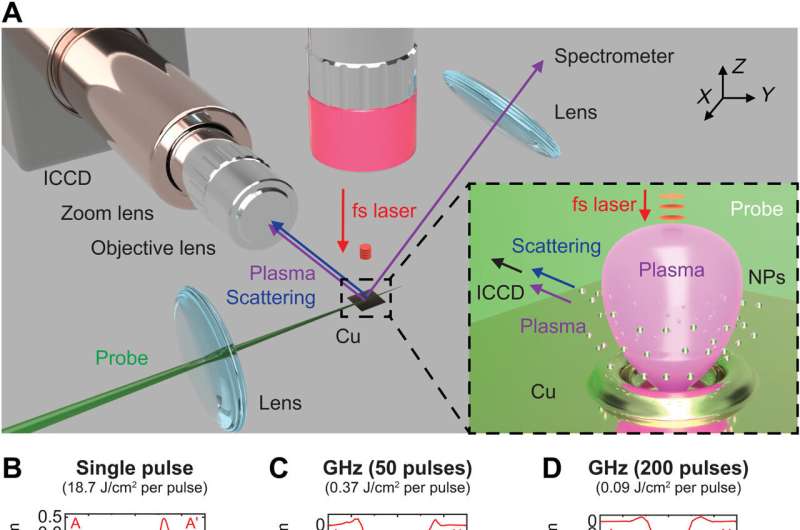

During the experiments, the team used an optical system to investigate the ablation mechanisms of copper with a single femtosecond laser pulse and with gigahertz femtosecond bursts under atmospheric pressure. Using time-resolved scattering and emission images, the researchers visualized light-emitting and non-emitting species. After ablating a pristine copper surface to a depth of 500 nm, they characterized the crater morphology with white light interferometry and scanning electron microscopy. The scientists noted the appearance of irregular, resolidified structures on the irradiated spot. The ablation efficiency of the gigahertz bursts improved manifold compared to single-pulse irradiation.

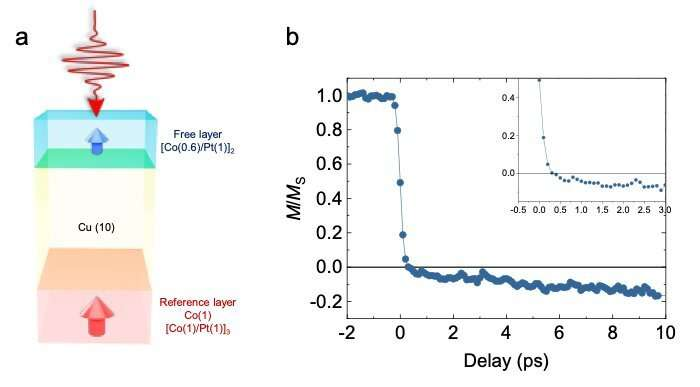

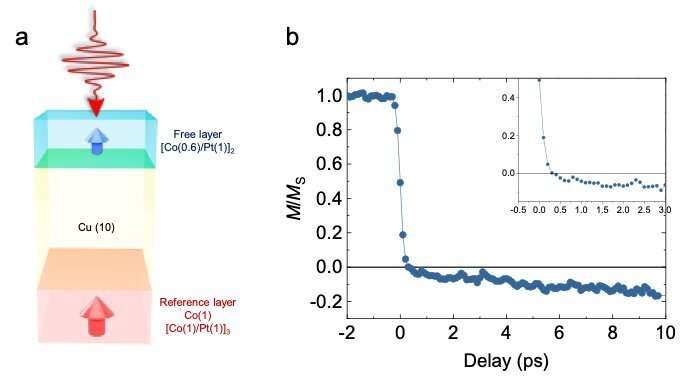

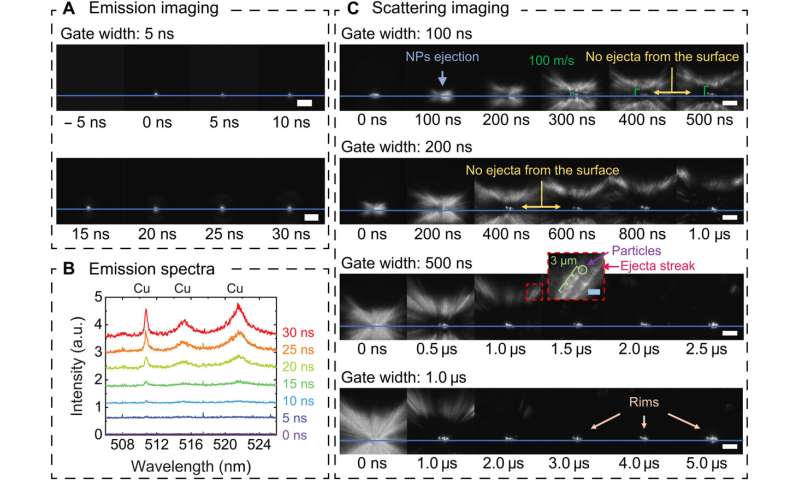

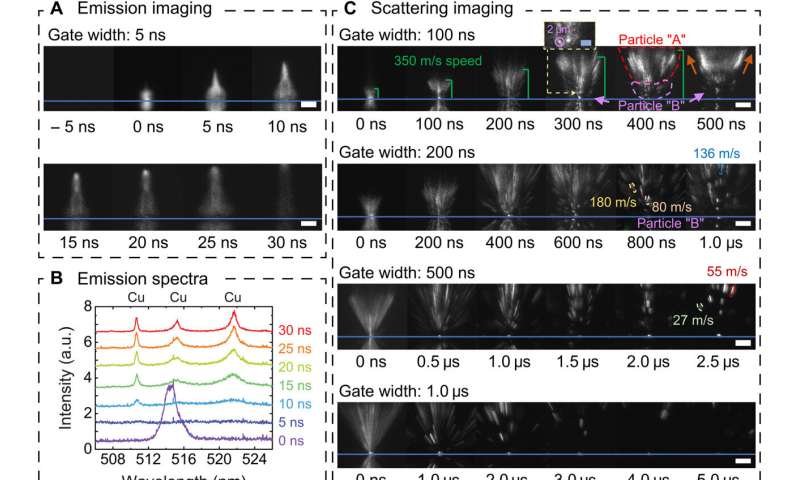

GHz fs burst ablation with 200 pulses. Time-resolved (A) emission imaging, (B) optical emission spectroscopy, and (C) scattering imaging to investigate ablation dynamics by GHz fs laser with 200 pulses at a fluence of 18.7 J/cm2 (0.09 J/cm2 per pulse, 155 ns total irradiation time), across different time scales. Scattering images were captured for 100 ns, 200 ns, 500 ns, and 1 μs, respectively. The blue lines represent the target Cu surface. White scale bars, 50 μm; blue scale bars, 10 μm. Credit: Science Advances (2023). DOI: 10.1126/sciadv.adf6397

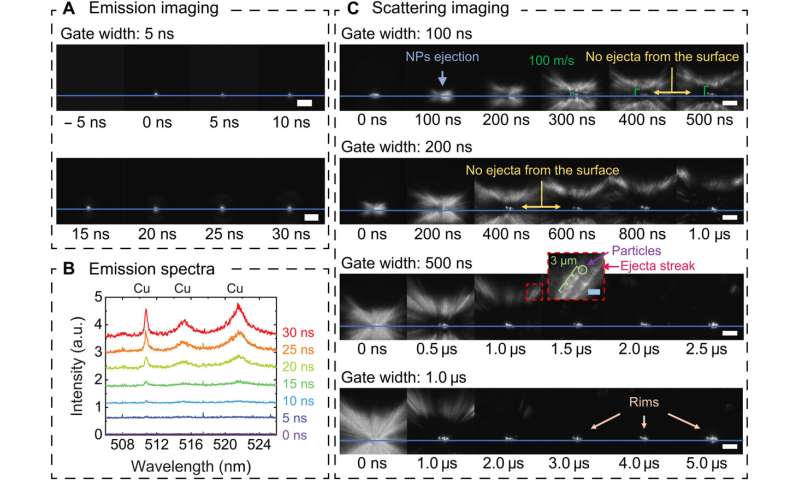

Single-pulse fs laser irradiation. Time-resolved (A) emission imaging, (B) optical emission spectroscopy, and (C) scattering imaging showing the ablation dynamics at a fluence of 18.7 J/cm2, across different time scales. a.u., arbitrary units. Scattering images were acquired for varying ICCD gate widths of 100 ns, 200 ns, 500 ns, and 1 μs, respectively. The blue lines in these images represent the Cu target surface, and images below the lines are mirror reflections from the polished Cu surface. White scale bars, 50 μm; blue scale bars, 10 μm. Credit: Science Advances (2023). DOI: 10.1126/sciadv.adf6397

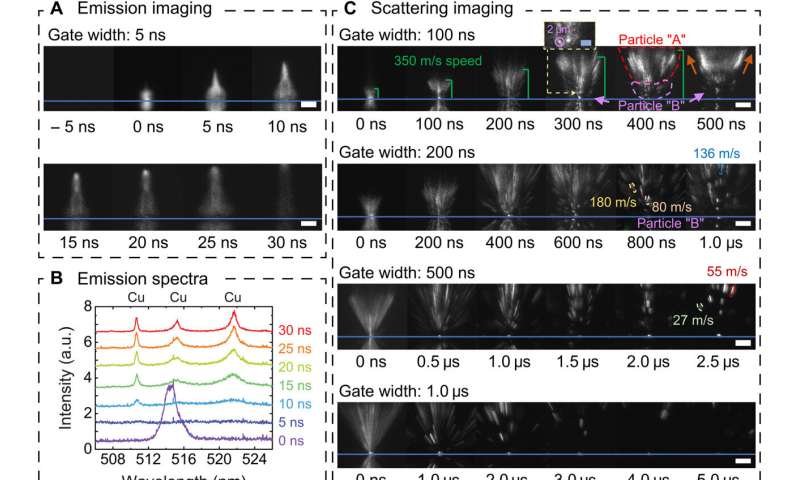

GHz fs burst ablation with 50 pulses. Time-resolved (A) emission imaging, (B) optical emission spectroscopy, and (C) scattering imaging showing ablation dynamics and mechanisms at a fluence of 18.7 J/cm2 (0.37 J/cm2 per pulse, 38-ns dwell time). Scattering images were acquired for 100 ns, 200 ns, 500 ns, and 1 μs, respectively. The blue lines show the target Cu surface. White scale bars, 50 μm. Credit: Science Advances (2023). DOI: 10.1126/sciadv.adf6397
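The per-pulse fluences quoted in the figure captions follow from dividing the total burst fluence of 18.7 J/cm2 by the number of pulses in the burst. The short Python sketch below reproduces that arithmetic and also estimates the pulse repetition rate inside each burst. The function names are illustrative, and the rate estimate rests on two assumptions that the captions do not state: that the pulses are evenly spaced, and that the quoted burst duration (155 ns or 38 ns) spans the first to the last pulse.

```python
def per_pulse_fluence(total_fluence_j_cm2: float, n_pulses: int) -> float:
    """Split the total burst fluence evenly across all pulses in the burst."""
    return total_fluence_j_cm2 / n_pulses


def intra_burst_rate_hz(n_pulses: int, burst_duration_s: float) -> float:
    """Estimate the repetition rate inside a burst.

    Assumption: pulses are evenly spaced and the duration runs from the
    first pulse to the last, so n pulses are separated by n - 1 intervals.
    """
    return (n_pulses - 1) / burst_duration_s


TOTAL_FLUENCE_J_CM2 = 18.7  # total fluence quoted in the captions

# Pulse count -> burst duration in seconds, as quoted in the captions.
BURSTS = {200: 155e-9, 50: 38e-9}

for n_pulses, duration_s in BURSTS.items():
    fluence = per_pulse_fluence(TOTAL_FLUENCE_J_CM2, n_pulses)
    rate_ghz = intra_burst_rate_hz(n_pulses, duration_s) / 1e9
    print(f"{n_pulses} pulses: {fluence:.2f} J/cm2 per pulse, "
          f"about {rate_ghz:.2f} GHz inside the burst")
```

Under these assumptions both burst settings work out to a repetition rate of roughly 1.3 GHz, which is consistent with the "gigahertz" label, and the per-pulse fluences round to the 0.09 J/cm2 and 0.37 J/cm2 given in the captions.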


Visualizing the outcome

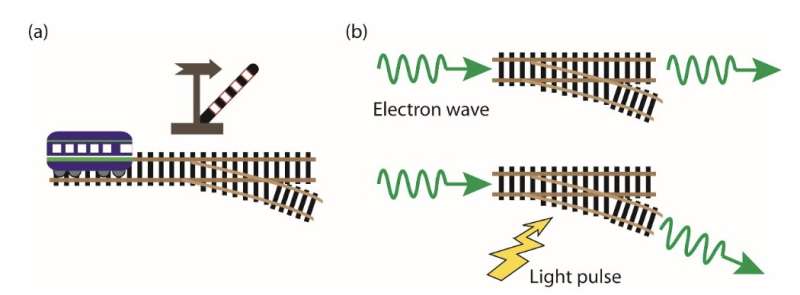

The research team used time-resolved emission images, emission spectra and scattering images to investigate the ablation dynamics of a single-pulse femtosecond laser on a copper surface. The images revealed that two different types of particles were ejected from the substrate on different timescales: (1) those released after a delay of 0–200 nanoseconds, and (2) those ejected between 300 nanoseconds and 4 microseconds.

The researchers explored time-resolved emission imaging and spectroscopy alongside images of ablated plumes induced via gigahertz bursts composed of 50 pulses. They noted spherically shaped copper plasmas for a period of 30 nanoseconds during the experiments.

Laser-ablation dynamics

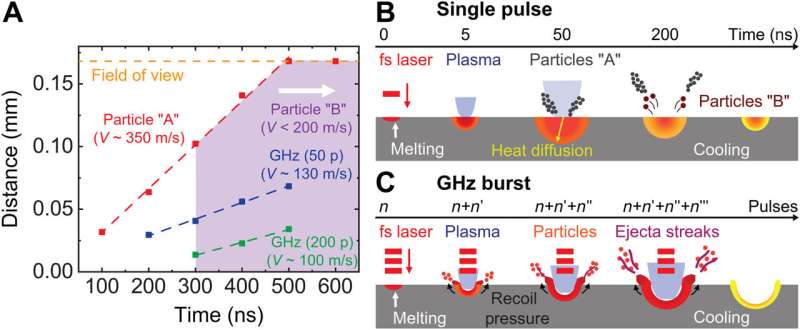

After 200 nanoseconds, the team did not observe ejecta at the center of the laser-matter interaction zone, indicating that the target was not ablated further. This behavior differed distinctly from the dynamics of single-pulse ablation.

The team identified two mechanisms that contribute to the underlying process of material ejection: (1) the vaporization of material at the center, and (2) the ejection of liquid from the molten pool edge via fast, radially outward fluid motion due to the recoil pressure exerted by vaporization. While the copper nanoparticles were expelled from the edge of the molten pool, a limited amount of liquid remained and froze on the crater surface, which they verified using scanning electron microscopy.

Comparative laser-ablation dynamics

The scientists used time-resolved emission imaging, emission spectroscopy and scattering imaging to study ablation driven by gigahertz femtosecond laser bursts. Scattering images acquired at a timescale later than 300s showed that the irradiation spot had cooled down, which inhibited further material removal.

The researchers compared the two experimental conditions and further studied the early ablation dynamics of copper driven by gigahertz bursts. The ablation dynamics of a gigahertz burst with 200 pulses differed distinctly from those of a gigahertz burst with 50 pulses. The outcomes provided direct confirmation that the mechanisms of gigahertz burst ablation differ from those of single-pulse irradiation.

Outlook

In this way, Minok Park and colleagues observed the ablation dynamics of copper by using single femtosecond laser pulses and gigahertz bursts with 50–200 pulses via multimodal probing methods. The single-pulse femtosecond laser irradiation produced two types of particles with different ejection speeds at different timescales.

The outcomes provide insights into the ablation mechanisms underlying gigahertz femtosecond bursts, which are critical for exploring a variety of applications across laser processing, machining, printing and spectroscopic diagnostics.

More information: Minok Park et al, Mechanisms of ultrafast GHz burst fs laser ablation, Science Advances (2023). DOI: 10.1126/sciadv.adf6397

Jan Kleinert et al, Ultrafast laser ablation of copper with ~GHz bursts, Laser Applications in Microelectronic and Optoelectronic Manufacturing (LAMOM) XXIII (2018). DOI: 10.1117/12.2294041

Journal information: Science Advances

© 2023 Science X Network