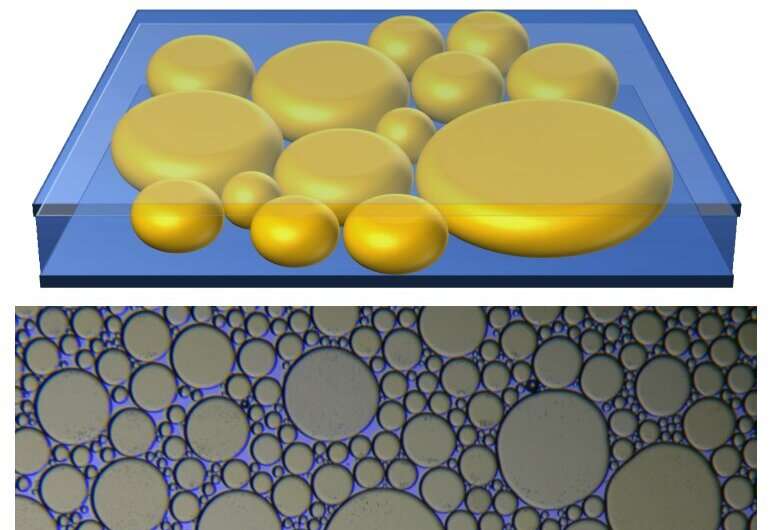

The shifting, scintillating pattern you can see when you stack two slightly misaligned window screens is called moiré. A similar interference effect occurs when scientists stack two-dimensional crystals with mismatched atomic spacings. Moiré superlattices display exotic physical properties that are absent in the layers that make up the patterns. These properties are rooted in the quantum nature of electrons.
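To get a feel for the length scale involved, the period of such a pattern can be estimated from the two lattice spacings. The sketch below uses the standard geometric formula for two stacked hexagonal lattices with a lattice mismatch and an optional twist angle. The lattice constants are approximate literature values, not figures from this article, and the function name `moire_period` is purely illustrative.

```python
import math

def moire_period(a_small, a_large, twist_deg=0.0):
    """Estimate the moiré period of two stacked hexagonal lattices.

    a_small, a_large: lattice constants of the two layers (same units).
    twist_deg: relative twist angle between the layers, in degrees.
    """
    delta = (a_large - a_small) / a_small      # fractional lattice mismatch
    theta = math.radians(twist_deg)
    denom = math.sqrt(2 * (1 + delta) * (1 - math.cos(theta)) + delta ** 2)
    return (1 + delta) * a_small / denom

# Assumed, approximate in-plane lattice constants in nanometers.
A_WS2 = 0.3153
A_WSE2 = 0.3282

period = moire_period(A_WS2, A_WSE2)           # aligned layers, no twist
print(f"Estimated moiré period: {period:.1f} nm")
```

Under these assumptions the aligned layers give a period of roughly 8 nm, about 25 times the atomic spacing. Adding a small twist angle shortens the period.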

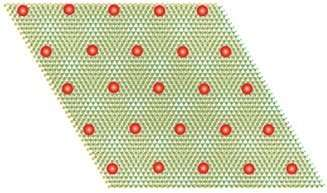

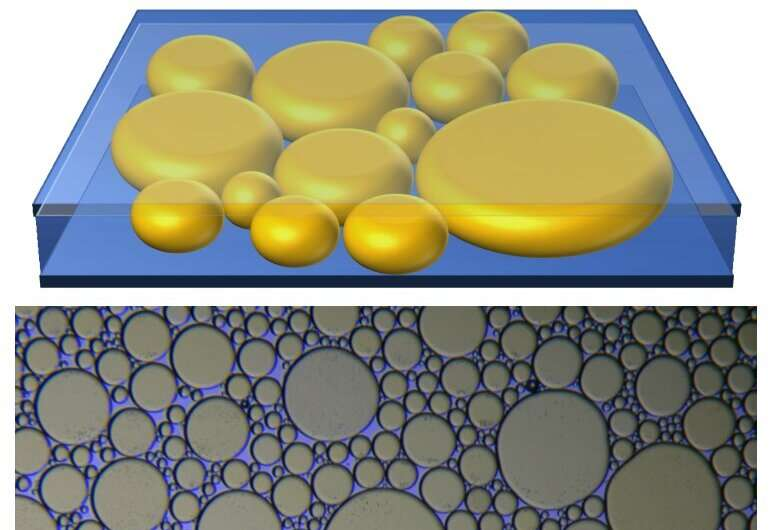

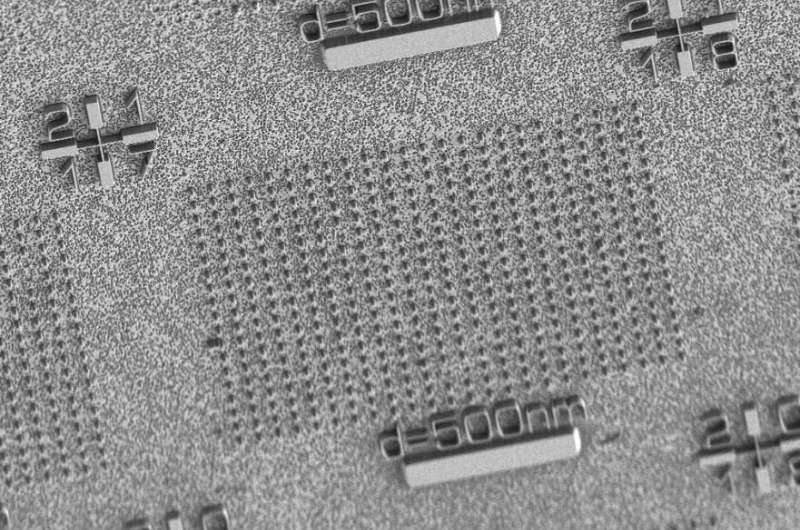

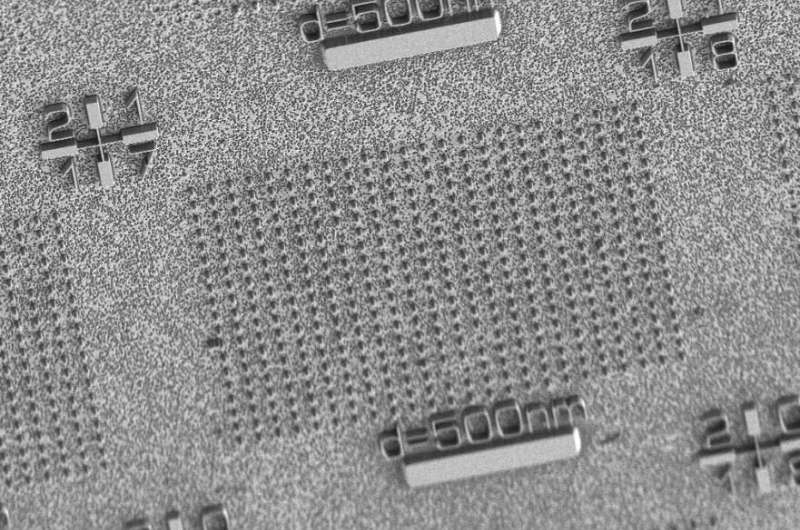

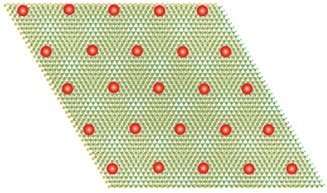

Researchers have discovered a new property of the moiré superlattices formed by stacking layers of tungsten diselenide and tungsten disulfide (WSe2/WS2). In these two-dimensional crystals, the interactions between electrons become so strong that the electrons “freeze” and form an ordered array.

WSe2/WS2 moiré superlattices turn out to be an optimal playground for tuning the interactions between electrons. The stronger these interactions, the more prominent the quantum mechanical nature of solid materials. This allows exotic states of matter like unconventional superconductivity to form.

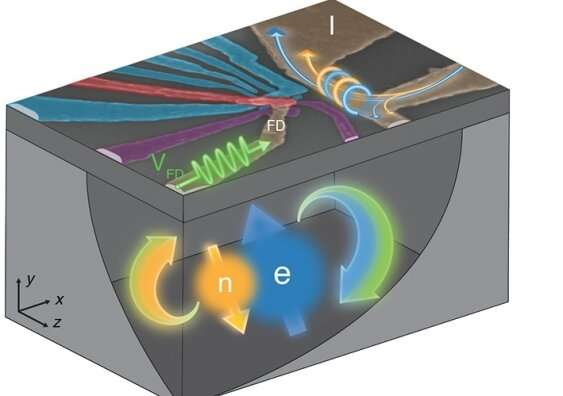

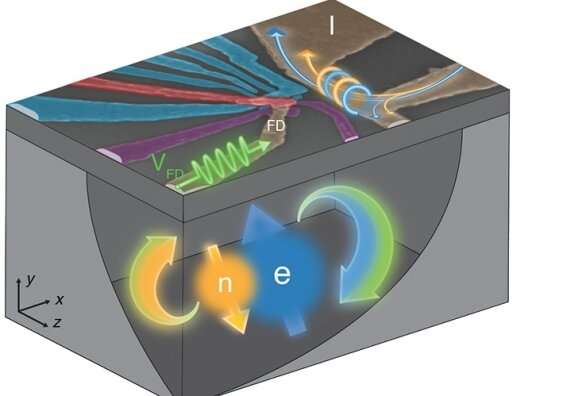

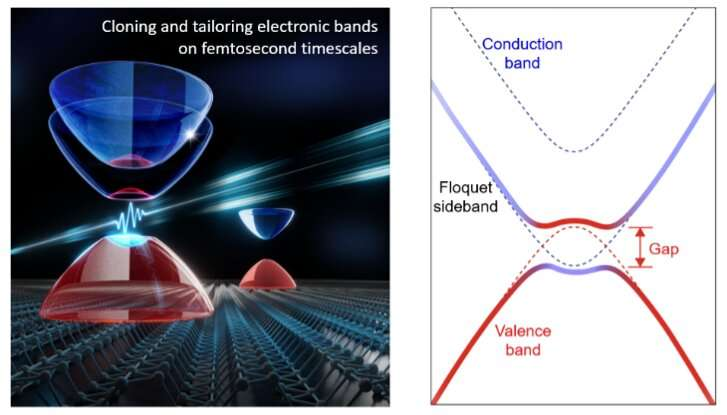

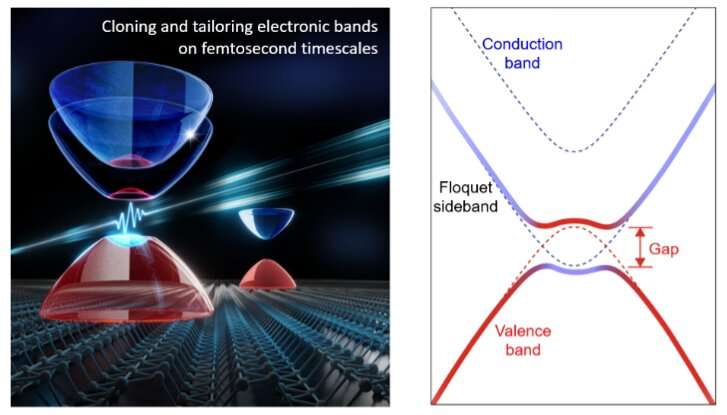

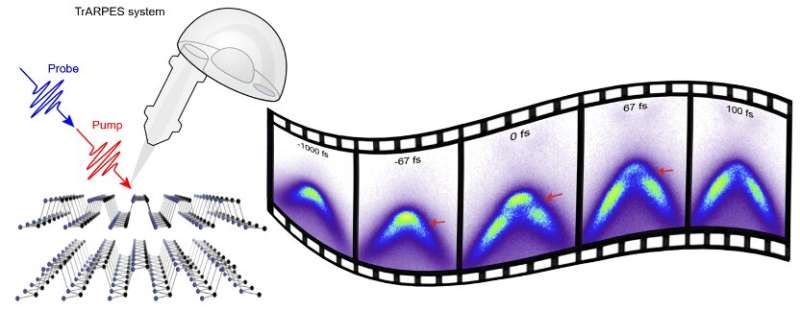

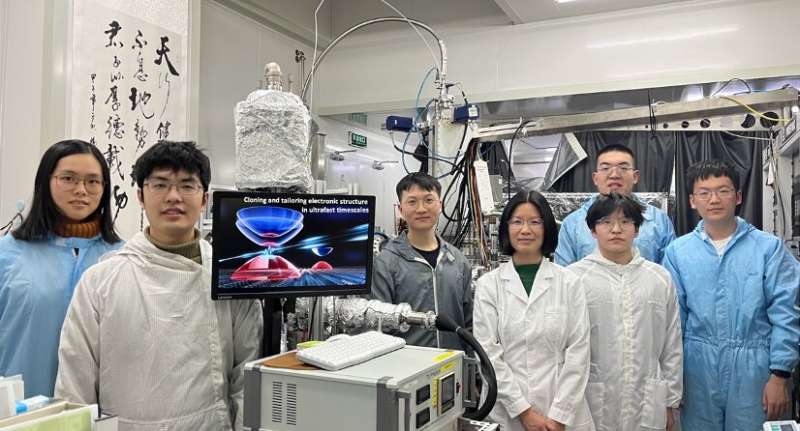

Researchers used lasers to “observe” the electron motion without the artifacts that plague other measurement techniques. They uncovered a rare quantum state of matter, never before observed in moiré superlattices. Understanding and controlling the quantum motion of electrons will allow scientists to build microelectronic devices of the future and robust qubits for quantum computing.

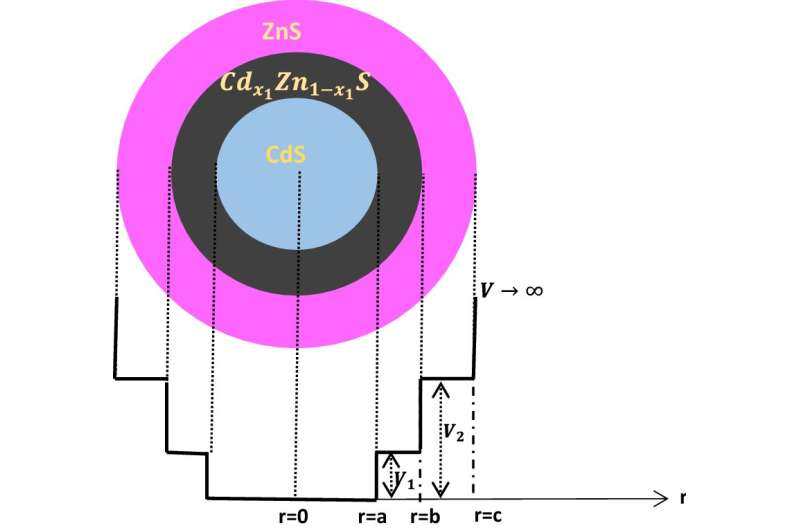

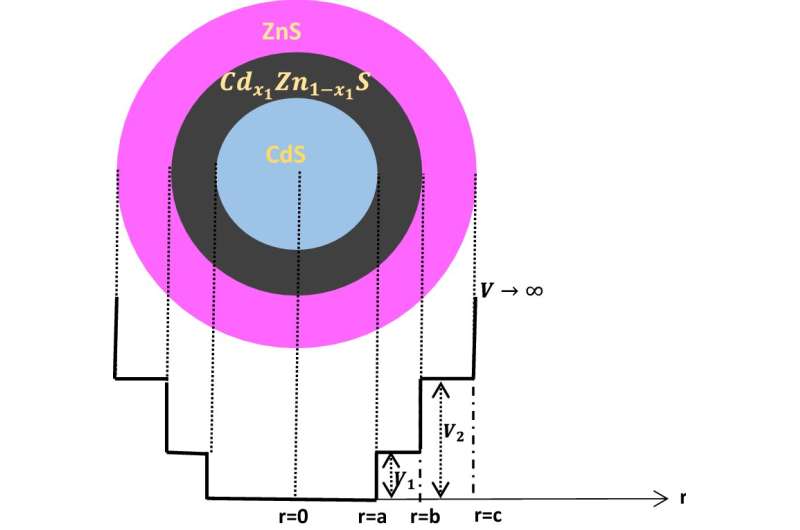

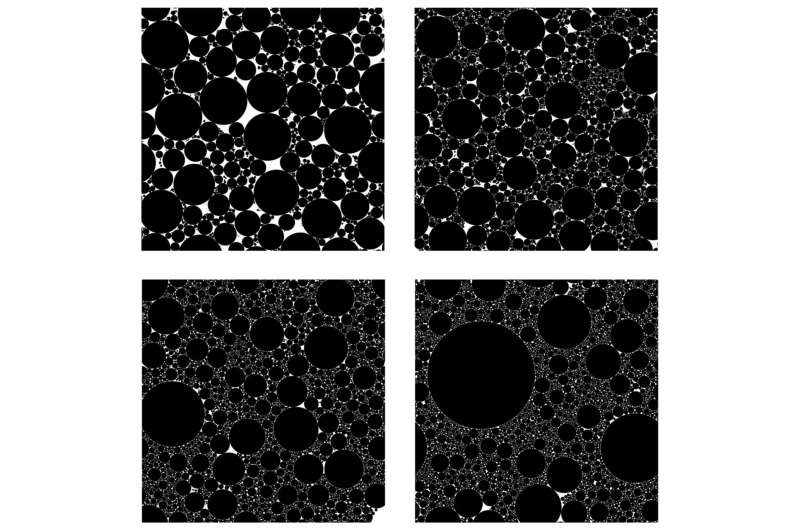

In solids, the energy levels that electrons occupy form energy bands. Moiré superlattices alter the atomic periodicity seen by the electrons and thus the energy bands. Moiré effects can lead to “flat” bands, in which the energy levels are squeezed together, causing electrons to lower their kinetic energy and thus to feel their mutual repulsion more strongly.

A team of researchers at Lawrence Berkeley National Laboratory (LBNL) used a novel optical technique to observe electron motion while changing the number of electrons injected into the sample. When exactly one carrier per moiré unit cell was injected, the electrons were expected to move freely and thus conduct electricity. Instead, the sample became insulating. This result illustrates the Mott insulator state, in which electrons interact so strongly that they avoid occupying the same cell. When every cell is already occupied by one electron, no electron can hop to a neighboring cell without sharing it, so the electrons stop moving.

The real surprise came when fewer electrons were injected, so that only one half or one third of the cells were occupied. At these low densities, scientists expected the electrons to feel each other's presence less and to move easily. However, the sample again turned out to be an insulator. In WSe2/WS2, electrons interact so strongly that they even avoid sitting on neighboring sites. This rare state is known as a Wigner electron crystal.
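The idea of electrons avoiding neighboring sites can be illustrated with a toy counting exercise. The sketch below assumes the moiré cells form a triangular lattice (an assumption not stated in this article) and compares full occupancy with a one-third-filled arrangement in which every third site is occupied. The names `occupied` and `count_neighbor_pairs` are hypothetical. This is a geometric illustration only, not a simulation of the experiment.

```python
# Nearest-neighbor offsets on a triangular lattice, written in lattice
# coordinates (i, j) with basis vectors 60 degrees apart (assumed geometry).
NEIGHBORS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, -1), (-1, 1)]

def occupied(i, j, filling):
    """Return True if cell (i, j) holds an electron in the chosen pattern."""
    if filling == "1":
        return True                      # one electron in every cell
    if filling == "1/3":
        return (i - j) % 3 == 0          # one of three interleaved sublattices
    raise ValueError(f"unsupported filling: {filling}")

def count_neighbor_pairs(n, filling):
    """Count occupied nearest-neighbor pairs on an n x n periodic patch.

    n should be a multiple of 3 so the 1/3 pattern wraps around consistently.
    """
    pairs = 0
    for i in range(n):
        for j in range(n):
            if not occupied(i, j, filling):
                continue
            for di, dj in NEIGHBORS:
                if occupied((i + di) % n, (j + dj) % n, filling):
                    pairs += 1
    return pairs // 2                    # each pair was counted from both ends

n = 6
electrons = sum(occupied(i, j, "1/3") for i in range(n) for j in range(n))
print("cells:", n * n, "electrons at 1/3 filling:", electrons)
print("neighbor pairs at full filling:", count_neighbor_pairs(n, "1"))
print("neighbor pairs at 1/3 filling:", count_neighbor_pairs(n, "1/3"))
```

In this toy picture, the one-third pattern places no two electrons on adjacent cells, which is the kind of ordered arrangement that strong mutual repulsion favors.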

LBNL researchers also demonstrated that in WSe2/WS2, light with the appropriate polarization interacts with spin-up and spin-down electrons separately, making it possible to selectively change the energy of electrons based on their spin. In doing so, they observed spin excitations that persist orders of magnitude longer than charge excitations. This opens the door to future investigation of exotic spin states such as quantum spin liquids.

Related research was previously published in 2020 in the journal Nature.

More information: Emma C. Regan et al, Mott and generalized Wigner crystal states in WSe2/WS2 moiré superlattices, Nature (2020). DOI: 10.1038/s41586-020-2092-4

Journal information: Nature

Provided by US Department of Energy