Researchers from North Carolina State University and the University of North Carolina at Chapel Hill used chiral phonons to convert wasted heat into spin information—without needing magnetic materials. The finding could lead to new classes of less expensive, energy-efficient spintronic devices for use in applications ranging from computational memory to power grids.

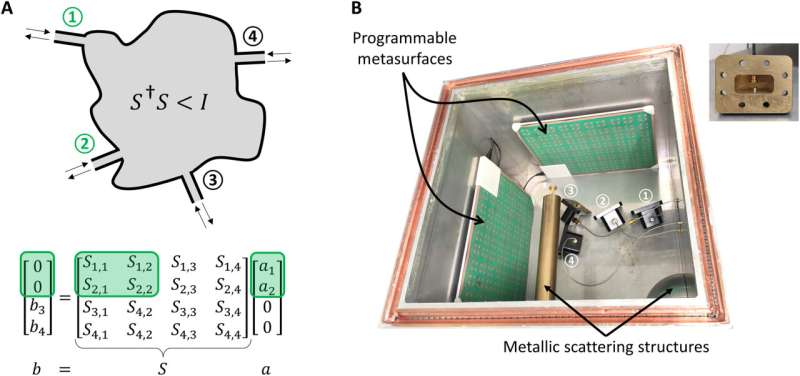

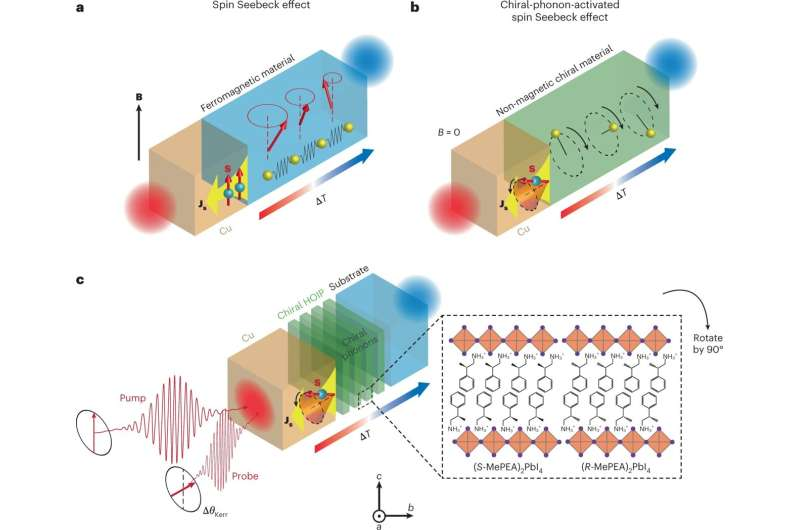

Spintronic devices are electronic devices that harness the spin of an electron, rather than its charge, to create currents used for data storage, communication, and computing. Spin caloritronic devices, so called because they use thermal energy to create spin current, are promising because they can convert waste heat into spin information, which makes them highly energy efficient. However, existing spin caloritronic devices must contain magnetic materials in order to create and control the electron's spin.

“We used chiral phonons to create a spin current at room temperature without needing magnetic materials,” says Dali Sun, associate professor of physics and member of the Organic and Carbon Electronics Lab (ORaCEL) at North Carolina State University.

“By applying a thermal gradient to a material that contains chiral phonons, you can direct their angular momentum and create and control spin current,” says Jun Liu, associate professor of mechanical and aerospace engineering at NC State and ORaCEL member.

Both Liu and Sun are co-corresponding authors of the research, which appears in Nature Materials.

Chiral phonons are collective vibrations in which groups of atoms move in circular paths when excited by an energy source, in this case heat. As the phonons travel through a material, they carry that circular motion, or angular momentum, with them. The angular momentum serves as the source of spin, and the chirality dictates the direction of the spin.

“Chiral materials are materials that cannot be superimposed on their mirror image,” Sun says. “Think of your right and left hands—they are chiral. You can’t put a left-handed glove on a right hand, or vice versa. This ‘handedness’ is what allows us to control the spin direction, which is important if you want to use these devices for memory storage.”

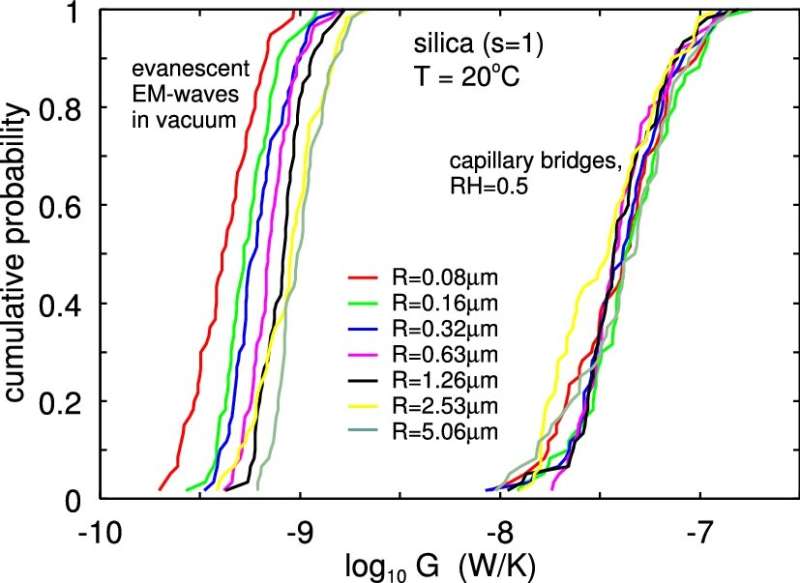

The researchers demonstrated chiral phonon-generated spin currents in a two-dimensional layered hybrid organic-inorganic perovskite by using a thermal gradient to introduce heat to the system.

"A gradient is needed because the temperature difference in the material, from hot to cold, drives the motion of the chiral phonons through it," says Liu. "The thermal gradient also allows us to use captured waste heat to generate spin current."

The researchers hope that the work will lead to spintronic devices that are cheaper to produce and can be used in a wider variety of applications.

“Eliminating the need for magnetism in these devices means you’re opening the door wide in terms of access to potential materials,” Liu says. “And that also means increased cost-effectiveness.”

“Using waste heat rather than electric signals to generate spin current makes the system energy efficient—and the devices can operate at room temperature,” Sun says. “This could lead to a much wider variety of spintronic devices than we currently have available.”

More information: Lifa Zhang, Chiral-phonon-activated spin Seebeck effect, Nature Materials (2023). DOI: 10.1038/s41563-023-01473-9. www.nature.com/articles/s41563-023-01473-9

Journal information: Nature Materials

Provided by North Carolina State University

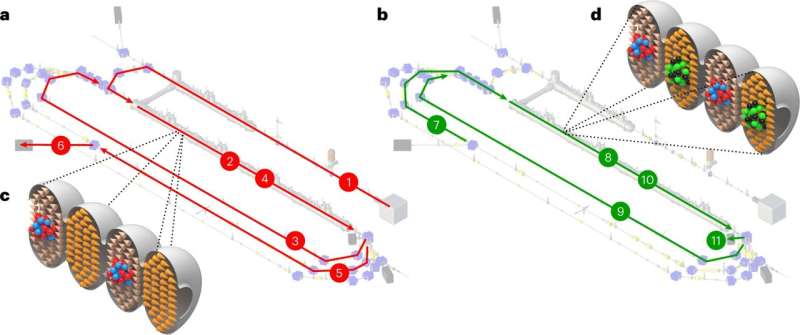

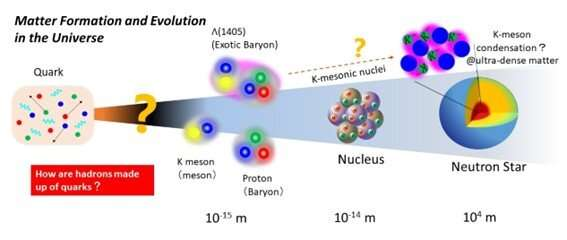

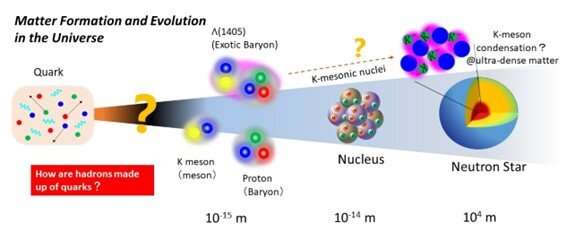

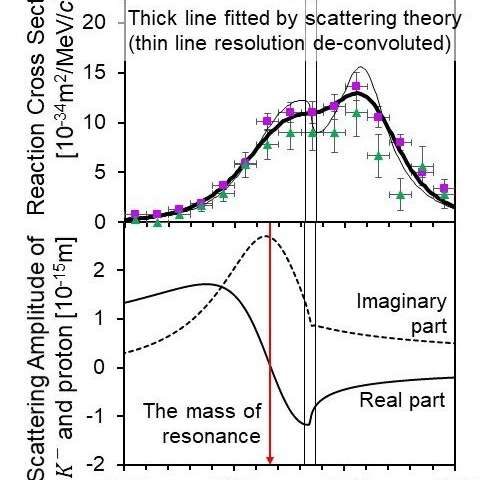

(Top) Measured reaction cross-section. The horizontal axis is the K– and proton collision recoil energy converted into a mass value. Large reaction events occur at mass values lower than the sum of the K– and proton masses, which itself suggests the existence of Λ(1405). The measured data were reproduced by scattering theory (solid lines). (Bottom) Distribution of K– and proton scattering amplitudes. When squared, these correspond to the reaction cross-section, and are generally complex numbers. The calculated values match with the measured data. When the real part (solid line) crosses 0, the value of the imaginary part reaches its maximum value. This is a typical distribution for a resonance state, and determines the complex mass. The arrows indicate the real part. Credit: 2023, Hiroyuki Noumi, Pole position of Λ(1405) measured in d(K^-,n)πΣ reactions, Physics Letters B

(Top) Measured reaction cross-section. The horizontal axis is the K– and proton collision recoil energy converted into a mass value. Large reaction events occur at mass values lower than the sum of the K– and proton masses, which itself suggests the existence of Λ(1405). The measured data were reproduced by scattering theory (solid lines). (Bottom) Distribution of K– and proton scattering amplitudes. When squared, these correspond to the reaction cross-section, and are generally complex numbers. The calculated values match with the measured data. When the real part (solid line) crosses 0, the value of the imaginary part reaches its maximum value. This is a typical distribution for a resonance state, and determines the complex mass. The arrows indicate the real part. Credit: 2023, Hiroyuki Noumi, Pole position of Λ(1405) measured in d(K^-,n)πΣ reactions, Physics Letters B Schematic illustration of the reaction used to synthesize Λ(1405) by fusing a K– (green circle) with a proton (dark blue circle), which takes place inside a deuteron nucleus. Credit: Hiroyuki Noumi

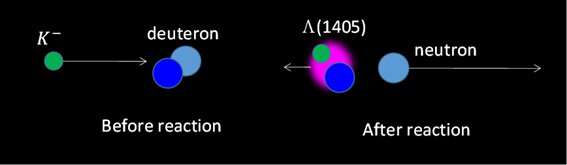

Schematic illustration of the reaction used to synthesize Λ(1405) by fusing a K– (green circle) with a proton (dark blue circle), which takes place inside a deuteron nucleus. Credit: Hiroyuki Noumi