Quantum theory predicts the existence of so-called tripartite-entangled states, in which three quantum particles are related in a way that has no counterpart in classical physics. Theoretical physicists would like to understand how well new theories, alternatives to quantum theory, might be able to reproduce the behavior of these states.

John Clauser, Alain Aspect and Anton Zeilinger, whose work was recently recognized by the Nobel Committee, experimentally tested Bell’s theorem by observing violations of Bell inequalities, showing that no local hidden-variable alternative to quantum theory can reproduce this behavior. In other words, they showed that quantum correlations are nonlocal.

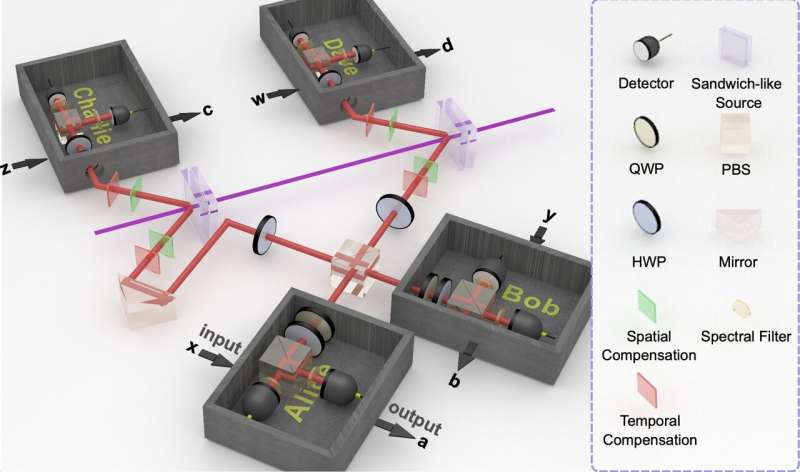

Researchers at the University of Science and Technology of China, Institute of Photonic Sciences, Università della Svizzera Italiana and Perimeter Institute for Theoretical Physics have recently carried out an experimental study generalizing these findings by considering new potential theories. Their findings, published in Physical Review Letters, suggest that the correlations achieved by the tripartite-entangled state used in their experiment cannot be explained by a hypothetical theory involving a generalization of bipartite entanglement, called “exotic sources of two particles,” in addition to a local hidden-variable theory.

“The main objective of our study was to prove that the behavior of a three-particle quantum source (e.g., a source of three photons) cannot be reproduced by any new hypothetical theory (replacing quantum theory, yet to be discovered) which only involves exotic pairs of two particles described by new physical laws and a local hidden variable model,” Marc-Olivier Renou, one of the authors of the paper, told Phys.org.

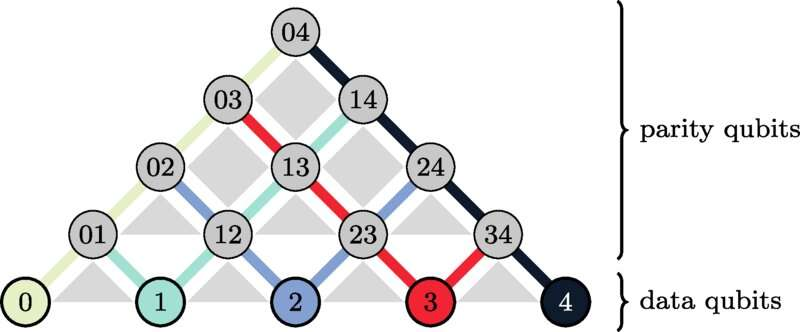

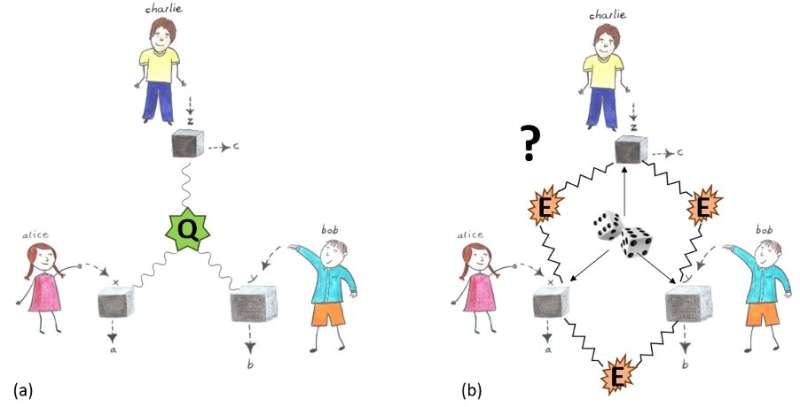

Gaël Massé, a second author, explains: “To do this, we used the idea contained in the ‘inflation technique,’ invented by Elie Wolfe, one of our coauthors. If we imagine a pair of two particles described by new physical laws, then even if we have no idea how to describe them we can still create a copy of this pair and make all the particles interact together in a new way. While this technique seems elementary, it has often proved to be a very powerful tool to address theoretical abstract concepts.”

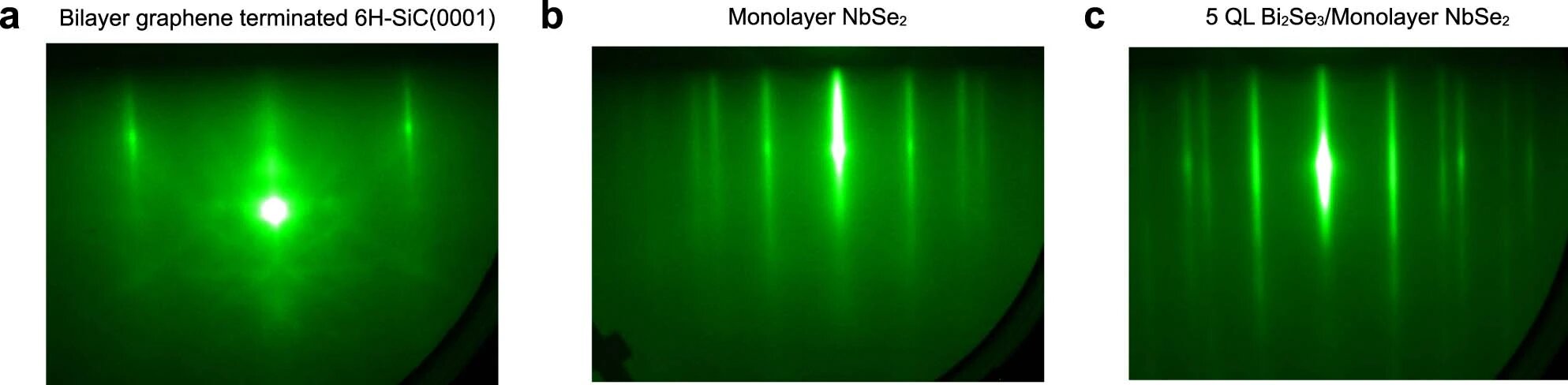

In their paper, the researchers first derived a new device-independent witness that could falsify causal theories with bipartite nonclassical resources. Then, through a lab experiment performed by Huan Cao and Chao Zhang, they showed that a tripartite-entangled state (the “GHZ state”) can produce, in practice, correlations that violate this witness.
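To give a rough sense of why the GHZ state is a natural candidate for such tests, the sketch below evaluates the textbook Mermin inequality for three parties. This is not the witness derived in the paper: the Mermin inequality only rules out local hidden-variable models, whereas the new witness also rules out bipartite exotic sources. The function names (`kron`, `expectation`, `correlator`, `mermin_quantum`, `mermin_local_bound`) are illustrative choices, and the state is an ideal, noiseless GHZ state rather than experimental data.

```python
from itertools import product

# Single-qubit Pauli matrices as nested lists.
X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]

def kron(a, b):
    """Kronecker product of two square matrices given as nested lists."""
    n, m = len(a), len(b)
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(n * m)]
            for i in range(n * m)]

def expectation(op, state):
    """Return the real part of <state|op|state> for a state vector (list)."""
    op_state = [sum(op[i][j] * state[j] for j in range(len(state)))
                for i in range(len(state))]
    return sum(complex(s).conjugate() * v
               for s, v in zip(state, op_state)).real

def correlator(a, b, c, state):
    """Expectation value of the three-party observable a (x) b (x) c."""
    return expectation(kron(kron(a, b), c), state)

# Ideal GHZ state (|000> + |111>) / sqrt(2); the first qubit is the
# most significant bit of the index.
ghz = [0.0] * 8
ghz[0] = ghz[7] = 2 ** -0.5

def mermin_quantum(state):
    """Mermin expression <XXX> - <XYY> - <YXY> - <YYX> for a given state."""
    return (correlator(X, X, X, state) - correlator(X, Y, Y, state)
            - correlator(Y, X, Y, state) - correlator(Y, Y, X, state))

def mermin_local_bound():
    """Maximum of the same expression over deterministic local strategies,
    where each party preassigns a value of +1 or -1 to X and to Y."""
    best = None
    for x1, y1, x2, y2, x3, y3 in product((1, -1), repeat=6):
        value = x1 * x2 * x3 - x1 * y2 * y3 - y1 * x2 * y3 - y1 * y2 * x3
        best = value if best is None else max(best, value)
    return best

if __name__ == "__main__":
    print("GHZ value:", mermin_quantum(ghz))
    print("Local hidden-variable bound:", mermin_local_bound())
```

Under these assumptions the ideal GHZ state reaches a value of about 4, while every deterministic local assignment is limited to 2. Shared randomness only mixes such assignments, so it cannot exceed that bound either.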

“Using a high-performance photonic GHZ3 state with fidelities of 0.9741±0.002, we provide a clear experimental violation of that witness by more than 26.3 standard deviations, under the locality and fair sampling assumption,” the team explained in their paper. “We generalize our Letter to the |GHZ4⟩ state, obtaining correlations that cannot be explained by any causal theory limited to tripartite nonclassical common causes assisted with unlimited shared randomness.”

The recent work is a generalization of Bell’s theorem. Its most remarkable achievement is that it goes beyond what physicists previously thought was possible in constraining potential alternatives to quantum theory.

“Bell ruled out the possibility that quantum correlations can be explained by a local hidden variable model (i.e., shared randomness),” Xavier Coiteux-Roy, a coauthor of the study, explains. “We went a bit further, by proving that even if you add ‘bipartite exotic sources’ in your theory, it still doesn’t work. In fact, we generalized the result, showing that if you add tripartite, quadripartite, and other exotic sources, it still doesn’t work. You really need to involve N-partite exotic sources for any N, however high it is, as is done by quantum theory.” He concludes, “Note that the experiment has imperfections, called loopholes. Realizing an experiment without these loopholes, in particular the post-selection loophole, is a great challenge for experimentalists for the next years.”

Based on their findings, the team concluded that nature’s correlations are genuinely multipartite nonlocal. The experiments they have carried out so far allowed them to exclude theories of bipartite and tripartite exotic sources, under the locality and fair sampling assumptions, and they are now thinking of evaluating other alternatives to quantum theory.

“We are now trying to understand how far this idea can go, and how far we can exclude potential alternatives to quantum theory by just looking at concrete experimental results, without assuming that they are explained by quantum theory,” Renou added. “This might eventually allow us to exclude all potential alternatives to quantum theory.”

More information: Huan Cao et al, Experimental Demonstration that No Tripartite-Nonlocal Causal Theory Explains Nature’s Correlations, Physical Review Letters (2022). DOI: 10.1103/PhysRevLett.129.150402

Journal information: Physical Review Letters

© 2022 Science X Network