Manuel Endres, professor of physics at Caltech, specializes in finely controlling single atoms using devices known as optical tweezers. He and his colleagues use the tweezers, made of laser light, to manipulate individual atoms within an array of atoms to study fundamental properties of quantum systems. Their experiments have led to, among other advances, new techniques for erasing errors in simple quantum machines; a new device that could lead to the world’s most precise clocks; and a record-breaking quantum system controlling more than 6,000 individual atoms.

One nagging factor in this line of work has been the natural jiggling motion of atoms, which makes the systems harder to control. Now, reporting in the journal Science, the team has turned the problem on its head and used this atomic motion to encode quantum information.

“We show that atomic motion, which is typically treated as a source of unwanted noise in quantum systems, can be turned into a strength,” says Adam Shaw, a co-lead author on the study along with Pascal Scholl and Ran Finkelstein.

Shaw was a graduate student at Caltech during these experiments and is now a postdoctoral scholar at Stanford University. Scholl served as a postdoc at Caltech and now works at the quantum computing company Pasqal. Finkelstein held the Troesh Postdoctoral Prize Fellowship at Caltech and is now a professor at Tel Aviv University.

Ultimately, the experiment not only encoded quantum information in the motion of the atoms but also produced a state known as hyper-entanglement. In ordinary entanglement, two particles remain connected even when separated by vast distances. When researchers measure the particles' states, they observe this correlation: for example, if one particle is found in a state known as spin up (in which its angular momentum points up), the other will always be found spin down.

In hyper-entanglement, two or more characteristics of a particle pair are entangled at the same time. As a simple analogy, this would be like a set of twins separated at birth who turn out to have both the same name and the same type of car: two distinct traits are correlated between the twins.

In the new study, Endres and his team hyper-entangled pairs of atoms such that both their states of motion and their electronic states (their internal energy levels) were correlated between the two atoms. What is more, this experimental demonstration suggests that even more traits could be entangled at the same time.

“This allows us to encode more quantum information per atom,” Endres explains. “You get more entanglement with fewer resources.”

The experiment is the first demonstration of hyper-entanglement in massive particles, such as neutral atoms or ions (earlier demonstrations used photons).

Adam Shaw, Ivaylo Madjarov and Manuel Endres work on their laser-based apparatus at Caltech. Credit: Caltech

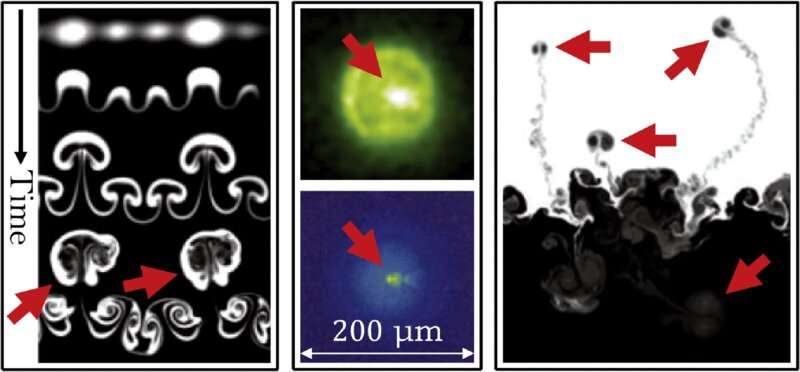

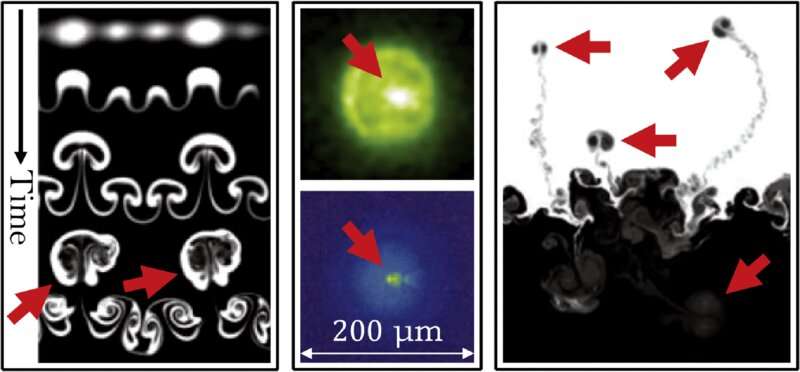

For these experiments, the team cooled down an array of individual alkaline-earth neutral atoms confined inside optical tweezers. They demonstrated a novel form of cooling via “detection and subsequent active correction of thermal motional excitations,” says Endres, which he compares to James Clerk Maxwell’s famous 1867 thought experiment invoking a demon that measures and sorts particles in a chamber. “We essentially measure the motion of each atom and apply an operation depending on the outcome, atom-by-atom, similar to Maxwell’s demon.”

The method, which outperformed the best-known laser cooling techniques, brought the atoms nearly to a complete standstill.

From there, the researchers set the atoms oscillating like a swinging pendulum, but with an amplitude of only about 100 nanometers, far smaller than the width of a human hair. They were able to excite the atoms into two distinct oscillations simultaneously, placing the motion in a state of superposition. Superposition is a quantum state in which a particle exists in two distinct states at once, such as a particle's spin being both up and down at the same time.

“You can think of an atom moving in this superposition state like a kid on a swing who starts getting pushed by two parents on opposite sides, but simultaneously,” Endres says. “In our everyday world, this would certainly lead to a parental conflict; in the quantum world, we can remarkably make use of this.”

They then entangled the individual swinging atoms with partner atoms, creating a correlated state of motion across a distance of several micrometers. Once the atoms were entangled, the team hyper-entangled them so that both the motion and the electronic states of the atoms were correlated.

“Basically, the goal here was to push the boundaries on how much we could control these atoms,” Endres says. “We are essentially building a toolbox: We knew how to control the electrons within an atom, and we now learned how to control the external motion of the atom as a whole. It’s like an atom toy that you have fully mastered.”

The findings could lead to new ways to perform quantum computing as well as quantum simulations designed to probe fundamental questions in physics. “Motional states could become a powerful resource for quantum technology, from computing to simulation to precision measurements,” Endres says.

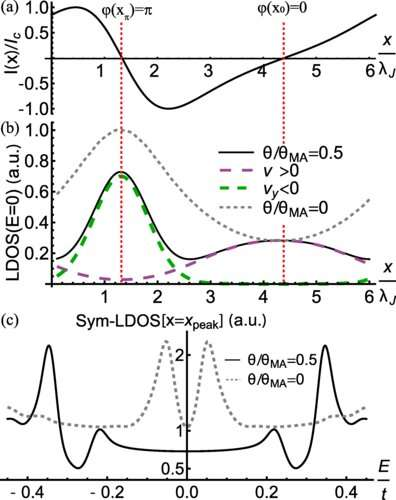
