A multinational research team, including engineers from the University of Cambridge and Zhejiang University, has developed a breakthrough in miniaturized spectrometer technology that could dramatically expand the accessibility and functionality of spectral imaging in everyday devices.

The study, titled “Stress-engineered ultra-broadband spectrometer,” published in the journal Science Advances, describes a novel, low-cost spectrometer platform built from programmable plastic materials rather than conventional glass.

The devices operate across the visible and into the short-wave infrared (SWIR), spanning 400 to 1,600 nanometers, a range that opens up a wealth of possibilities for real-world applications.

Traditionally, spectrometers—the tools that analyze the composition of light to detect materials or environmental conditions—have been bulky, expensive and difficult to mass-produce. Most are also limited to narrow spectral bands or rely on multiple specialized components to cover a broader range.

The new approach sidesteps these issues with a lightweight, scalable alternative that leverages recent advances in polymer science and computational optics.

A plastic revolution in optics

The team was inspired by the evolution of smartphone cameras, which now rely heavily on plastic optical components to achieve high performance in ultra-compact formats. Applying the same principle to spectrometer design, the researchers used transparent shape memory polymers (SMPs), in this case epoxies, to engineer dispersive optical elements: components that spread light out according to its wavelength.

What makes this approach truly innovative is the use of internal stress to tailor the optical properties of the plastic. Normally, stress patterns that develop during the manufacture of plastic objects are uncontrolled and unstable. However, SMPs can be mechanically stretched at elevated temperatures to “program” precise and stable stress distributions into the material. These stresses create birefringence, an optical effect in which the refractive index depends on the polarization of light. Because the resulting phase delay between the two polarizations varies with wavelength, a stressed film placed between polarizers transmits different wavelengths to different degrees.

“By shaping the internal stress within the polymer, we are able to engineer spectral behavior with high repeatability and tunability, something that’s incredibly difficult to achieve with conventional optics,” said Gongyuan Zhang from Zhejiang University, the lead author of the study.
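To show how programmed stress can translate into spectral filtering, here is a minimal Python sketch based on textbook photoelasticity rather than the paper's own model. It assumes the film sits between crossed polarizers with its stress axes at 45 degrees, follows a linear stress-optic law (Δn = Cσ) and has no dispersion. Under those assumptions the transmission is T(λ) = sin²(πΓ/λ), where Γ = Δn·d is the retardance. The helper names and all numerical values (stress levels, 500 µm thickness, C = 1e-4 per MPa) are hypothetical.

```python
import numpy as np

def crossed_polarizer_transmission(wavelength_nm, retardance_nm):
    """Transmission of a birefringent film between crossed polarizers.

    Assumes the film's stress axes are at 45 degrees to the polarizers and
    ignores absorption and any wavelength dependence of the birefringence.
    """
    phase = np.pi * retardance_nm / wavelength_nm  # half the phase delay between polarizations
    return np.sin(phase) ** 2

def retardance_from_stress(stress_mpa, thickness_um, stress_optic_coeff_per_mpa):
    """Linear stress-optic law: delta_n = C * sigma; retardance = delta_n * d, returned in nm."""
    delta_n = stress_optic_coeff_per_mpa * stress_mpa
    return delta_n * thickness_um * 1e3  # micrometres -> nanometres

wavelengths = np.linspace(400.0, 1600.0, 7)  # nm, the band quoted in the article
# Hypothetical stress levels and material constants, chosen only to illustrate the trend.
for stress in (10.0, 20.0, 40.0):
    gamma = retardance_from_stress(stress, thickness_um=500.0, stress_optic_coeff_per_mpa=1e-4)
    t = crossed_polarizer_transmission(wavelengths, gamma)
    print(f"stress {stress:5.1f} MPa, retardance {gamma:6.0f} nm:", np.round(t, 2))
```

In this model, a higher programmed stress gives a larger retardance and a transmission curve that oscillates more rapidly across 400 to 1,600 nm, which is why differently stressed regions can behave as distinct spectral filters.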

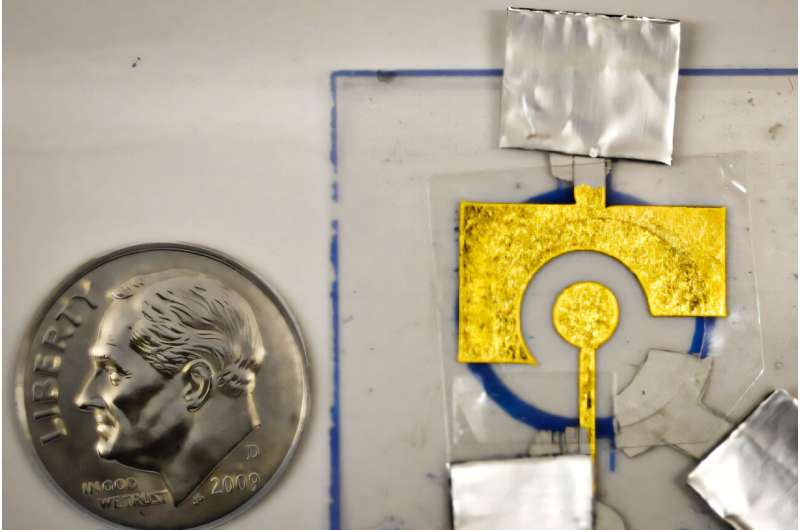

The resulting films act as spectral filters, encoding information that can be read by standard CMOS image sensors. With the aid of computational spectral reconstruction algorithms, these planar components can be turned into powerful, compact spectrometers.
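The reconstruction step can be illustrated with one common approach, which is not necessarily the algorithm the authors used. Each sensor reading is treated as the scene spectrum weighted by that filter region's transmission, y = A s, and the spectrum is recovered with Tikhonov-regularized least squares. In this sketch the filter model, grid sizes, regularization weight and test spectrum are all hypothetical.

```python
import numpy as np

def reconstruct_spectrum(A, y, alpha=1e-3):
    """Tikhonov-regularized least-squares estimate of a spectrum s from readings y ~ A @ s.

    A: (num_filters, num_wavelengths) filter transmission matrix.
    y: (num_filters,) sensor readings, one per filter region.
    alpha: regularization weight (hypothetical default; in practice tuned to the noise level).
    """
    n = A.shape[1]
    # Normal equations of: minimize ||A s - y||^2 + alpha * ||s||^2
    s = np.linalg.solve(A.T @ A + alpha * np.eye(n), A.T @ y)
    # Crude non-negativity step: a physical spectrum cannot be negative.
    return np.clip(s, 0.0, None)

# Simulated filter set: each region is modeled as a film between crossed polarizers
# with a different (hypothetical) retardance R, so T = sin^2(pi * R / lambda).
wavelengths = np.linspace(400.0, 1600.0, 121)   # nm
retardances = np.linspace(200.0, 8000.0, 200)   # nm, one per filter region
A = np.sin(np.pi * retardances[:, None] / wavelengths[None, :]) ** 2

# A made-up test spectrum: a single Gaussian line centred at 700 nm.
true_s = np.exp(-0.5 * ((wavelengths - 700.0) / 30.0) ** 2)
y = A @ true_s                                   # ideal, noise-free readings

s_hat = reconstruct_spectrum(A, y, alpha=1e-2)
print("estimated peak at", wavelengths[np.argmax(s_hat)], "nm")
```

Neighbouring filter responses are highly correlated, especially at long wavelengths, so the inversion is ill-conditioned. The regularization term keeps the estimate stable. Real systems often calibrate A by measurement rather than modeling it.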

From lab bench to consumer tech

One of the major achievements of this work is demonstrating that these stress-engineered films can be fabricated in a single step, without the need for lithography or expensive nanofabrication. This makes the devices ideal for mass production and integration into consumer electronics, such as mobile phones, wearable health monitors, and even food quality testers.

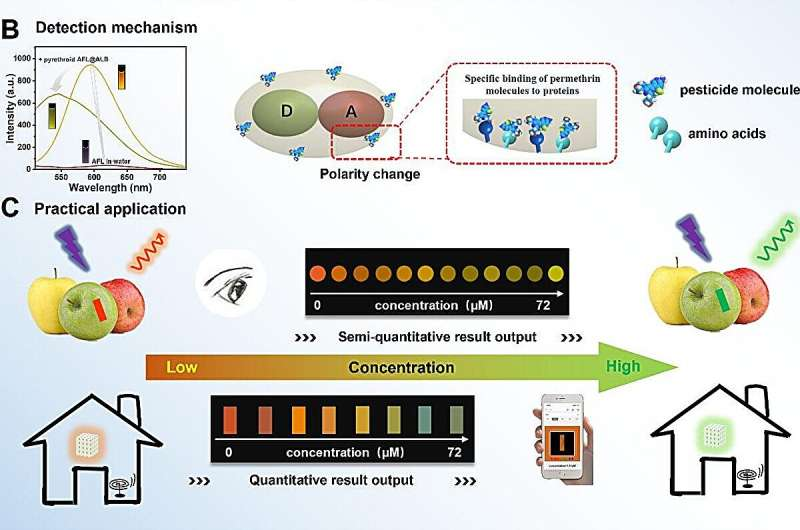

“We’ve shown that you can use programmable plastics to cover a much broader range of the spectrum than typical miniaturized systems, right into the SWIR,” said Professor Zongyin Yang of Zhejiang University, a co-author of the study. “That’s really important for applications like agricultural monitoring, mineral exploration, and medical diagnostics.”

The spectrometers are also highly compact, and the team successfully integrated them into a line-scanning spectral imaging system—suggesting their suitability for hyperspectral imaging in portable form. By linearly varying the stress across the length of the film, the team could create gradient filters capable of scanning a scene one slice at a time, collecting rich spectral data in the process.
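The line-scanning idea can be modeled in a few lines. This hypothetical sketch assumes a pushbroom-style scan in which the scene advances by one filter position per frame, and that the film's retardance rises linearly along its length. After enough frames, every scene point has been measured through every filter position. The result is one reading vector per point, which a reconstruction step could then convert into a spectrum. Function names and numbers are illustrative only.

```python
import numpy as np

def gradient_filter_matrix(wavelengths_nm, num_positions, r_min_nm, r_max_nm):
    """Filter responses along a film whose retardance rises linearly with position.

    Hypothetical model: stress (and hence retardance R) varies linearly along the film,
    and each position acts as a crossed-polarizer filter T = sin^2(pi * R / lambda).
    """
    retardance = np.linspace(r_min_nm, r_max_nm, num_positions)
    return np.sin(np.pi * retardance[:, None] / wavelengths_nm[None, :]) ** 2

def line_scan(scene_spectra, A):
    """Simulate sweeping a 1D scene across the gradient filter, one slice per frame.

    scene_spectra: (num_points, num_wavelengths). At frame t, filter position f sees
    scene point t - f (when that index exists). Returns (num_points, num_positions)
    readings: every scene point measured once through every filter position.
    """
    num_points, num_positions = scene_spectra.shape[0], A.shape[0]
    readings = np.zeros((num_points, num_positions))
    for t in range(num_points + num_positions - 1):  # enough frames to sweep the whole scene
        for f in range(num_positions):
            p = t - f
            if 0 <= p < num_points:
                readings[p, f] = A[f] @ scene_spectra[p]
    return readings

wl = np.linspace(400.0, 1600.0, 61)                 # nm
A = gradient_filter_matrix(wl, num_positions=40, r_min_nm=200.0, r_max_nm=6000.0)
rng = np.random.default_rng(0)
scene = rng.random((5, wl.size))                    # 5 scene points with made-up spectra
cube = line_scan(scene, A)
print(cube.shape)  # (5, 40)
```

The explicit frame loop mirrors how the data would arrive from a moving scan. Mathematically the result equals `scene @ A.T`, so each row of `cube` is exactly the measurement vector a per-point reconstruction would need.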

A platform for the future

This work represents more than a technical breakthrough; it lays the foundation for a new class of ultra-portable, broadband sensing devices that could transform industrial and consumer markets.

The researchers point to several likely applications: detecting pollutants in water or air, verifying the authenticity of drugs, monitoring blood sugar non-invasively, and even sorting recyclable materials in real time.

By eliminating the traditional trade-offs between size, cost and spectral range, the platform could democratize access to high-quality spectral data. It also aligns with broader research efforts at Cambridge’s Department of Engineering in computational photonics and sustainable sensing technologies, areas aiming to push intelligence and functionality into smaller, more accessible formats.

“This work shows how mechanical design principles can be used to reshape photonic functionality,” said co-author Professor Tawfique Hasan from Cambridge’s Department of Engineering.

“By embedding stress into transparent polymers, we have created a new class of dispersive optics that are not only lightweight and scalable but also adaptable across a wide spectral range. This level of flexibility is very difficult to achieve with traditional optics relying on static, lithographically defined structures.”